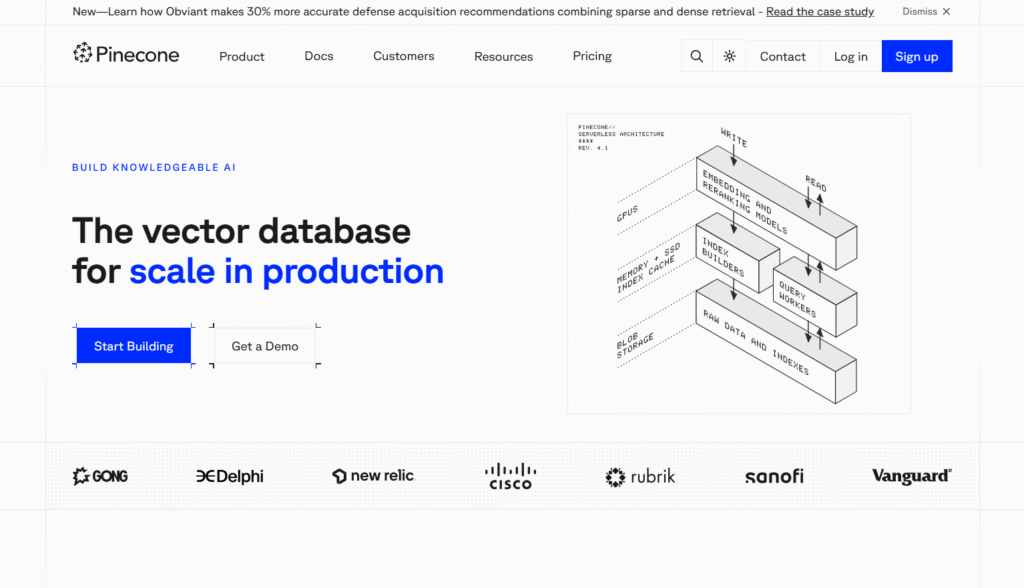

As artificial intelligence advances, the need to process and retrieve information based on meaning, not just keywords, has become crucial. This is where vector search comes in. Traditional databases are built around exact matches and keyword lookups, so they struggle with semantic queries. Pinecone, a managed vector database, is purpose-built to handle this challenge efficiently.

In this blog, we’ll break down what Pinecone is, how vector search works, and why it’s vital for modern AI applications.

What is Pinecone?

Pinecone is a fully managed vector database that allows developers to store, index, and query high-dimensional vector embeddings at scale. In simple terms, it lets AI applications retrieve information based on similarity of meaning, not exact matches.

Whether you’re building a semantic search engine, a recommendation system, or giving your LLM (like the models behind ChatGPT) long-term memory, Pinecone provides the backend infrastructure needed to search massive datasets in milliseconds.

How Vector Search Works

In AI, data like text, images, or videos is converted into vector embeddings: numerical representations in a multi-dimensional space, where items with similar meaning end up close together. Instead of keyword matching, vector search compares these embeddings using a measure such as cosine similarity or Euclidean distance to find the closest matches.

Example:

If you search for “healthy recipes,” vector search can return results like “low-calorie meals” or “vegetarian dishes” even if those exact words weren’t in your query.
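To make the comparison step concrete, here is a minimal sketch of both metrics in plain Python. The three-dimensional vectors are hand-picked for illustration and are not output from a real embedding model, which would typically produce hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two vectors; 0.0 means identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical toy embeddings (assumed values, not from a real model).
query = [0.9, 0.8, 0.1]  # stands in for "healthy recipes"
documents = {
    "low-calorie meals": [0.8, 0.9, 0.2],
    "vegetarian dishes": [0.7, 0.7, 0.3],
    "car repair manual": [0.1, 0.0, 0.9],
}

# Rank documents from most to least similar to the query.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query, documents[name]),
    reverse=True,
)
for name in ranked:
    print(name, round(cosine_similarity(query, documents[name]), 3))
```

With these assumed values, the two food-related entries rank well above the unrelated one, even though none of them shares a word with the query.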

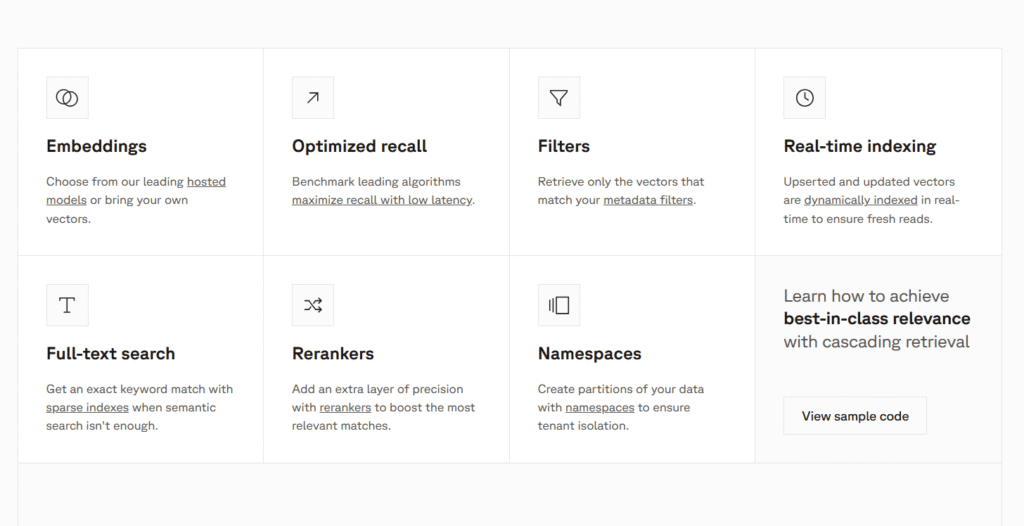

Key Features of Pinecone

- Scalability

  Handles billions of vectors with real-time search capability.

- Speed

  Delivers sub-second response times, even with large datasets.

- Managed Infrastructure

  No need to worry about servers, indexing, or scaling; it’s fully managed.

- Integration with AI Models

  Works seamlessly with tools like OpenAI, Hugging Face, and LangChain to power semantic applications.

- Filtering and Metadata Support

  You can filter results using metadata like tags, categories, or user preferences.
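The idea behind metadata filtering can be sketched with a small in-memory stand-in. This is not Pinecone's client API: the `query` function, the record field names (`id`, `values`, `metadata`), and the exact-match filter are all assumptions made for illustration, and the search here is brute force rather than the indexed search a real vector database would use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def query(records, vector, top_k=2, metadata_filter=None):
    """Return the top_k (id, score) pairs among records matching the filter.

    Hypothetical helper: the filter keeps only records whose metadata
    equals every key/value pair in metadata_filter.
    """
    metadata_filter = metadata_filter or {}
    candidates = [
        r for r in records
        if all(r["metadata"].get(k) == v for k, v in metadata_filter.items())
    ]
    scored = [(r["id"], cosine_similarity(vector, r["values"])) for r in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy records with assumed two-dimensional vectors.
records = [
    {"id": "salad", "values": [0.9, 0.1], "metadata": {"category": "recipe"}},
    {"id": "smoothie", "values": [0.8, 0.3], "metadata": {"category": "recipe"}},
    {"id": "gym-plan", "values": [0.9, 0.2], "metadata": {"category": "fitness"}},
]

results = query(records, [1.0, 0.1], top_k=2, metadata_filter={"category": "recipe"})
print([record_id for record_id, _ in results])
```

Here `gym-plan` is close to the query vector but is excluded because its category does not match the filter, so only the two recipe records are returned.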

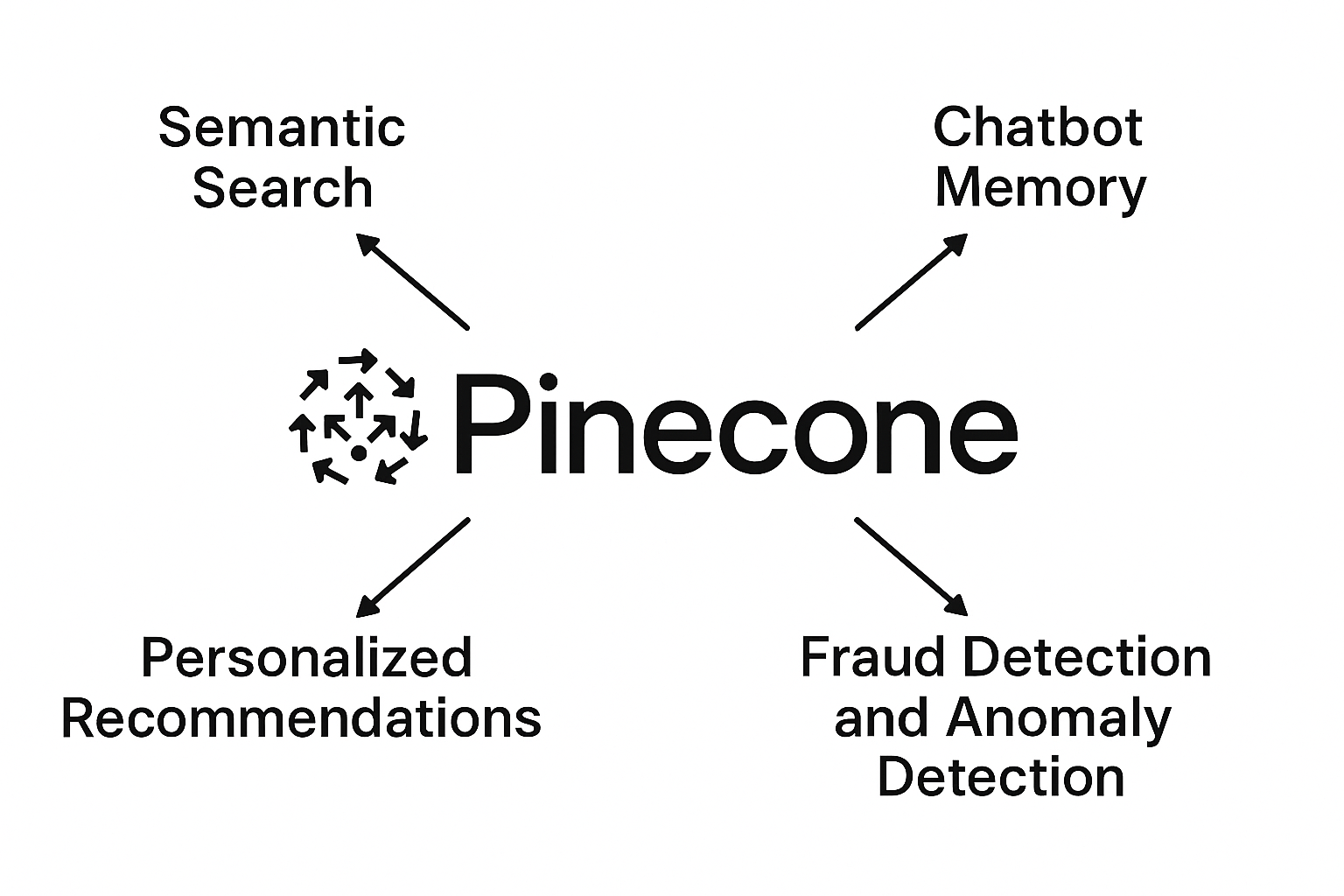

Use Cases in AI

- Semantic Search

  Improve search relevance in ecommerce, customer support, or content discovery.

- Chatbot Memory

  Add long-term memory to LLMs by storing past interactions as vectors.

- Personalized Recommendations

  Deliver user-specific content based on preference embeddings.

- Fraud Detection and Anomaly Detection

  Spot unusual patterns in vector data using proximity-based analysis.
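As one illustration of proximity-based analysis, the sketch below flags any vector whose nearest neighbor is farther away than a chosen threshold. The `find_anomalies` helper, the transaction vectors, and the threshold value are all hypothetical; a real system would tune the threshold on its own data and use an indexed search instead of comparing every pair.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_anomalies(vectors, threshold):
    """Flag ids whose nearest neighbor is farther away than threshold.

    Expects at least two vectors; compares every pair (brute force).
    """
    anomalies = []
    for vec_id, vec in vectors.items():
        nearest = min(
            euclidean_distance(vec, other)
            for other_id, other in vectors.items()
            if other_id != vec_id
        )
        if nearest > threshold:
            anomalies.append(vec_id)
    return anomalies


# Assumed toy embeddings: three similar transactions and one outlier.
transactions = {
    "txn-1": [1.0, 1.1],
    "txn-2": [0.9, 1.0],
    "txn-3": [1.1, 0.9],
    "txn-4": [5.0, 4.8],
}
print(find_anomalies(transactions, threshold=1.0))
```

The first three vectors sit close together, while `txn-4` has no nearby neighbor and is the only one flagged.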

Why Pinecone Over Other Tools?

Unlike general-purpose databases or DIY vector indexing, Pinecone is optimized specifically for real-time, high-dimensional similarity search. It handles everything from indexing and sharding to consistency and uptime, so developers can focus on building, not maintaining.

Final Thoughts

In a world where understanding context and meaning is everything, vector search is no longer optional; it’s essential. And Pinecone is leading the charge in making this technology scalable, fast, and accessible.

Whether you’re working on an AI assistant, recommendation engine, or search tool, Pinecone gives your application the brainpower it needs to think like a human by understanding meaning.